This essay was originally published in 2018 on Medium.

Big Data

We live in an age of scale. Of trillion-dollar companies. Of social media followers measured in the hundreds of millions. Of efficiency, consumption, and greed being the greatest of our goals and ideals. And it is into this context that a new technology emerges: Artificial Intelligence.

AI seems perfectly aligned with our current moment, for it is built on the idea of scale — AI requires huge, almost unimaginable, amounts of data to learn and function. These are quantities of data that only our largest companies can provide, for they are the ones who see and capture everything we do.

Embedded within this data are the beliefs and biases present in our culture. And as a result we develop, not entirely intentionally, AI applications that further accelerate and optimize the scales, and inequalities, already in place.

Is this future inevitable? Can only our largest organizations, with their vast data sets, decide how we will use AI?

What if, instead, we could start small? To work at the scale of the personal. To engage directly with AI. Could doing so allow us to develop new intuitions and understandings of what the technology is, and what it could enable?

It is from this perspective that I approach my work. Instead of scale and efficiency, I ask: Can aesthetic experiences give a fresh start to how we think about AI? Can beauty be a basis from which we imagine new possibilities for AI?

Making Images

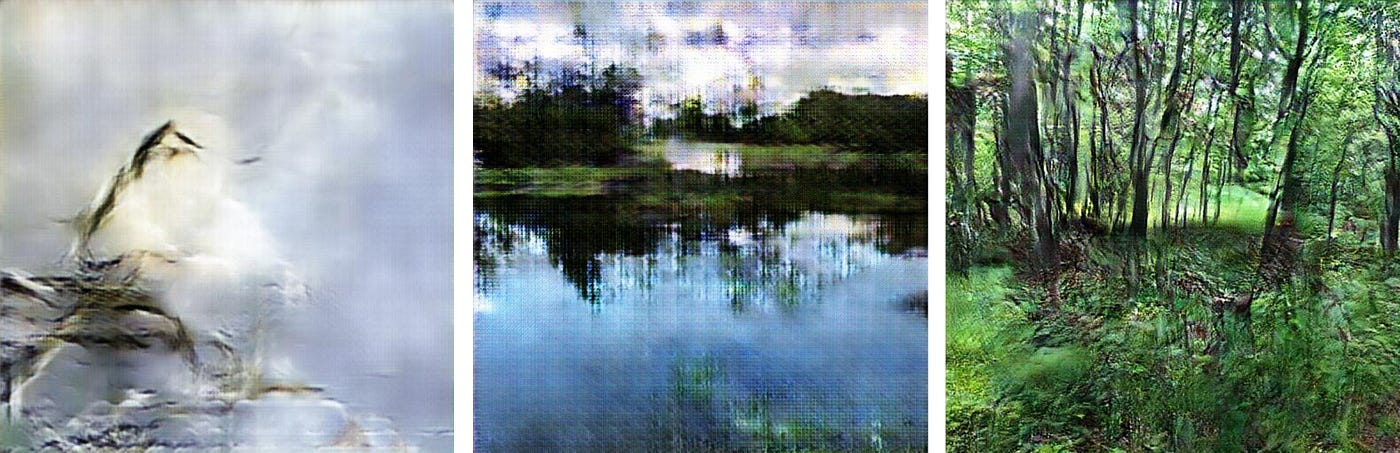

The work, titled “Learning Nature,” is built upon an AI machine learning technology known as Generative Adversarial Networks — or GANs.

A GAN is made up of two parts, which have an “adversarial” relationship. The first part, the discriminator, is trained on a set of source images. Over time it develops an understanding of what those images represent.

The second part of the GAN is the generator. It creates images from scratch and presents them to the discriminator, hoping that they will be mistaken as source images.

At first the generator isn’t very good. It creates little more than static, and the discriminator rejects the images. But, over time, it improves. Eventually it learns enough to trick the discriminator: it is able to generate images that are recognized as being equivalent to, or as real as, the source images. At least to the degree that our discriminator understands them.
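The back-and-forth described above can be sketched in a few lines of Python. This is only a toy under stated assumptions, not the code behind “Learning Nature”: single numbers stand in for images, the “source images” are assumed to be values scattered around 4.0, and the names `sigmoid` and `train_toy_gan`, along with the step count and learning rate, are hypothetical choices made for illustration. One detail the prose glosses over is that the discriminator is shown both real and generated samples at every step.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Logistic function, written to avoid overflow for large |t|."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Train a one-dimensional toy GAN (illustrative sketch only).

    Generator:      fake = a * z + b, with z drawn from random noise.
    Discriminator:  D(x) = sigmoid(w * x + c), its belief that x is real.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters (starts out producing "static")
    w, c = 0.0, 0.0  # discriminator parameters (starts out undecided)

    for _ in range(steps):
        # Discriminator step: see one real and one generated sample,
        # then nudge w and c to raise log D(real) + log(1 - D(fake)).
        real = rng.gauss(4.0, 0.5)  # assumed stand-in for a source image
        fake = a * rng.gauss(0.0, 1.0) + b
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: create a new sample and nudge a and b so the
        # discriminator is more likely to accept it (raise log D(fake)).
        z = rng.gauss(0.0, 1.0)
        fake = a * z + b
        d_fake = sigmoid(w * fake + c)
        a += lr * (1.0 - d_fake) * w * z
        b += lr * (1.0 - d_fake) * w

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator parameters:", a, b)
    print("discriminator parameters:", w, c)
```

No particular result is claimed here. Even a system this small can circle around its target rather than settle on it, which is part of why, in practice, a person decides when to stop.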

This approach, a system made up of two distinct entities, is an ideal platform to explore what it means for something to be an AI, and the artist’s relationship to it. Am I continuing the artistic tradition of using creative tools, just with one that is more advanced than any before it? Or am I a teacher, helping it to learn and then create on its own? Am I the generator or the discriminator?

Intelligence

In order to train the system I photographed a range of subjects, creating intentionally small sets of related images. I wanted to see if different environments and subjects might reveal how the code works, or what sort of intelligence developed. For example, how might teaching it with carefully composed images be different from a hodgepodge of snapshots? What qualities from those images might be learned, and then expressed, by the system?

This work is set in the rural context of upstate New York and the domain of nature. I chose this not only to emphasize its difference from mainstream AI, but also to place it in the area’s rich creative history. Almost two hundred years ago the Hudson River School used painting to express man’s relationship to nature. What would it mean for an AI today to understand and interpret that same nature?

As the machine began to create, I wasn’t sure what to expect. Was I going to get unintelligible noise? Or perhaps something vaguely clinical? But, very quickly, there emerged a gentle ambiguity that was remarkably seductive. It was a surprise to see that the work exhibited a strange beauty.

The beauty is useful for it draws us in. We want to understand what we’re seeing. We know that there’s not a human “intelligence” in the code, but still we anthropomorphize — we use human attributes and metaphors to try to explain and understand its actions.

As each image begins, highly pixelated and full of digital artifacts, there is a distinct impressionistic quality. We can easily imagine features that may or may not be there. We perceive the system as “trying” to give form by using its nascent intelligence. We want to believe that the image will eventually be resolved.

Yet, as the system works, we see areas where it struggles for that resolution. Like an artist working and reworking their canvas, we see how, through repetition, “ideas” are tried again and again. Each is then either further refined or rejected.

But parts of the image never get beyond the pixelated, or become something nonsensical. The system is unable to develop the knowledge required to complete those details. Its digital and machine nature is revealed — its limited training has rendered it unable to fully grasp the organic nature of its subject.

And there need be no end to the process. It could run forever, for there can always be another iteration. It’s only through human intervention, stopping the system, that it is finished.

The “intelligence” here is neither a human, nor a living, form of intelligence. Our “little AI,” as we train it with different subjects, doesn’t learn across those different experiences. Each time it starts from nothing, learning as it goes, with a singular focus — flowers, or trees, or a building. No understanding of the larger world develops. It’s a program run and then discarded.

And yet we cannot deny the human intelligence used in how I choose to teach the system, and how we interpret the results. And in both places our human biases are revealed. Do we see greater beauty in the images trained on cut-flower pictures? Is there really a claustrophobic quality to the images of the woods? We begin to recognize that those traits were already present in the training images.

Art in the age of…

Machines have been helping humans make art for almost as long as humans have been making art. While early machines were merely artists’ tools, as we moved into the modern era theorists and art historians grew increasingly anxious about the role of machines in the practice of making art. This anxiety began with the mass reproduction that came out of the invention of the printing press, and became more acute with the advent of photography.

In an era when critics were unconvinced by photography, and photographers tried to mimic painterly styles, Walter Benjamin argued for something different. In his essay “The Work of Art in the Age of Mechanical Reproduction,” Benjamin made the case for embracing the machine-enabled art form and the aesthetics that are derived from it.

How then should we feel about the role of AI in today’s world of art? Does the machine still bend to the will of the artist, or does it replace the artist altogether?

What I’ve discovered, through the process of helping the machine learn nature, is that it is indeed a symbiotic process. The “artist” must tune the imagery that’s put into the “machine” to craft its interpretation of nature. And the artist must continue to select the work that the machine creates (much like photographers would use a contact sheet) in order to make the most distinctive, and frankly beautiful, interpretation of nature.

Nothing that emerges is accurate, but the work isn’t asking for accuracy — it’s asking for the machine to build its own unique vision of the natural world. The misinterpretation is a piece of the work. Just as theorists have argued that the entire history of culture has been interpretation and misinterpretation of the cultural movements that preceded it, so too this work embraces the misinterpretation of nature by machine.

This process is not dissimilar to that used by both Hudson River School and Audubon artists. In each, the artists used elements from multiple scenes, or birds, to create their final composite image. Their images show something which doesn’t exist in the real world, but that captures their understanding of the subject.

It shouldn’t surprise us then, that as we look at the work, we find it begins to reflect patterns from those great landscape painters. We see hints of Dutch Master flower paintings in the ethereal flowers the machine builds, and echoes of Audubon in portraits of ghostly birds both there and seemingly blown apart by a sudden wind. All begin to emerge as I select for the images that most reflect the interpretive bonds we have with nature. We’re anxious, yes, but we are anxious because we want to deny that the work speaks to some inner sense of landscape and beauty that we cannot resist.

Embracing Beauty

We want to reject the work. It’s kind of like a photo, but is it? The machine is just trying to copy something, isn’t it? It doesn’t really know what it’s making, does it?

But those questions are wrong. It’s not a photo, it’s a unique interpretation of its subject. It’s not trying to copy, it’s trying to learn. And to the last question, we don’t know if it knows. We can’t, as of yet, know.

The desire to reject captures our own anxiety about the utterly new roles AI is beginning to play in our lives. It is because of this desire that I have carefully selected what is fed into the system and, likewise, edited the results. Slowly I built intelligences of landscape and nature that in some way connect to the way we have learned to appreciate landscape and nature.

This confusion of intelligence and intent makes us hate the work while we are drawn to it.

AI forces us to reconsider what we mean by intelligence and its endless forms. Through this work I have come to realize that AI is not an all-knowing superintelligence. But it is a new tool, and with it come issues and considerations much the same as those raised by tools from earlier eras.

We should never accept new technologies purely for their novelty. So, too, we should reject them if they reinforce existing, or create new, inequalities. But if they give us new ways to experience and understand the world around us, then they’re enabling new ways for us to imagine our future.

Using AI in the service of art may be a small step, but if beauty can be used to make AI more approachable, then we’re a step closer to building a more diverse and just future.

All images in this post are © 2018 David Young